GeoAI in 2026: Four predictions on agents, MCP, and live data

Insights from Ian Ward at Geoawesome Live

Large language models (LLMs) are rapidly becoming the interface for how people search, plan, and make decisions. For AI to meaningfully tackle real-world questions, its reasoning must be paired with reliable location context, data, and geospatial tooling.

At Geoawesome's “The Future of GeoAI: A 2026 Industry Forecast,” Ian Ward, a Mapbox founding team member and engineering leader on the Location AI team, shared how GeoAI is evolving and what location-aware AI can be expected to achieve over the next two years. His talk connected today’s realities, emerging standards like the Model Context Protocol (MCP), and the rise of specialized agents that rely on geospatial data. Watch the full presentation recording, starting at the 12:32 mark here.

Prediction #1: Geospatial will be core to the search and query experience

Ian’s first prediction is that by 2026, web search and geospatial tools will be tightly integrated into the major LLMs and assistants. Location and mapping capabilities will not be treated as ‘add-ons’; they will be built-in tools that models can call, much as Google is integrating Maps into Gemini. In other words, useful GeoAI will mean LLMs that can reason over live geospatial services grounded in real-world data.

Why large language models need geospatial intelligence

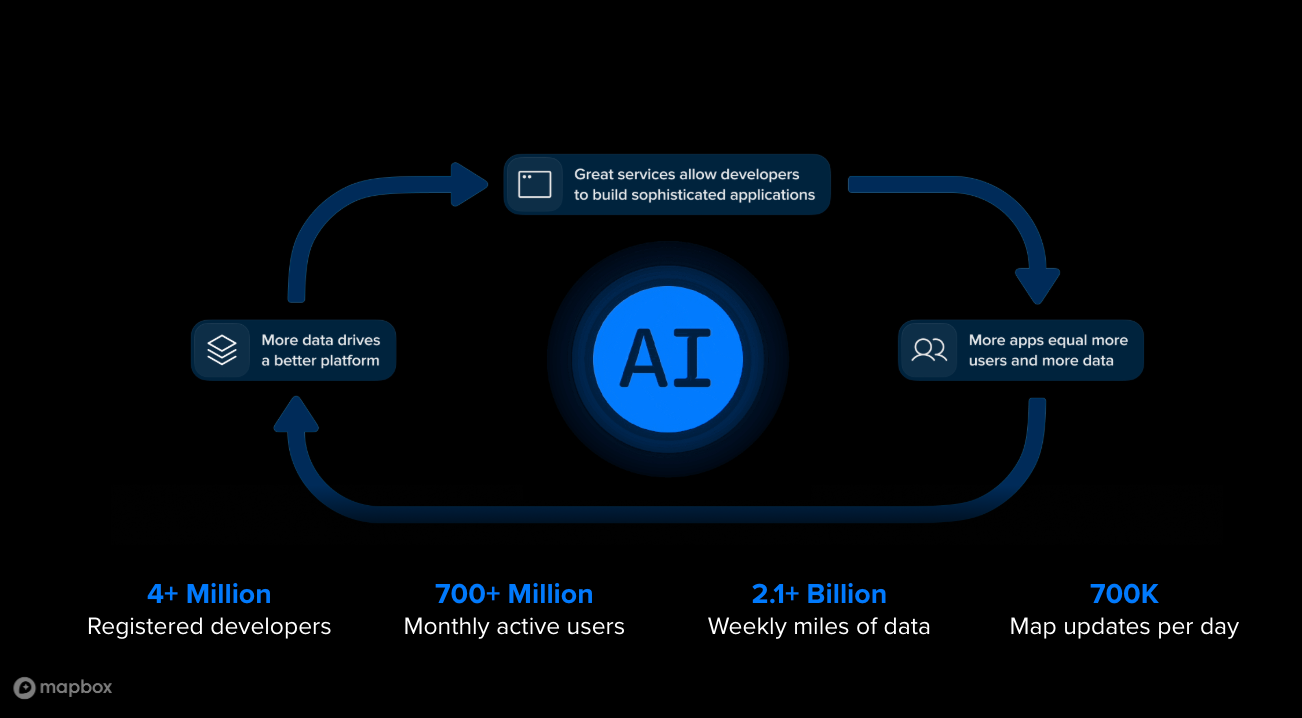

Ian began with an overview of what Mapbox powers today across industries like travel, recreation, on-demand logistics, automotive navigation, and more. He then turned to a core issue: large language models are not designed to answer time-sensitive, real-world questions on their own.

LLMs frequently return outdated information because their training data ends months or years before the model is used. Even a small change, like a stadium being renamed, can lead to an incorrect answer unless the model has access to live data or web search. The gap widens for location-dependent tasks such as planning a trip, checking traffic, or finding open businesses near a venue. A static model simply does not reflect newly opened or closed businesses, updated road networks, or changing travel conditions.

Prediction #2: MCPs will get better at handling large geospatial data

To illustrate how models access real-world services, Ian introduced the Model Context Protocol (MCP). MCP is an open standard, first proposed by Anthropic, that defines how external APIs, data sources, and actions are exposed to LLMs and agents. MCP servers advertise which tools they offer and how to call them, and platforms from Anthropic and OpenAI let these servers plug directly into their agent frameworks.
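MCP messages are JSON-RPC 2.0 requests. As a rough sketch of the advertise-then-call flow, the example below builds a `tools/list` request and a `tools/call` request using only Python's standard library. The tool name `forward_geocode`, its `query` argument, and the sample listing are hypothetical placeholders, not the Mapbox MCP Server's actual tool definitions; a real client would normally use an MCP SDK and a transport such as stdio instead of building strings by hand.

```python
import json
from typing import Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message envelope MCP uses."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Step 1: ask the server which tools it offers.
list_request = jsonrpc_request(1, "tools/list")

# Step 2: an illustrative reply. Each tool advertises a name, a description,
# and a JSON Schema ("inputSchema") for its arguments. This tool is hypothetical.
example_tools = [
    {
        "name": "forward_geocode",
        "description": "Convert a place name or address into coordinates.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
]


def build_tool_call(request_id: int, tool: dict, arguments: dict) -> str:
    """Check required arguments against the advertised schema, then build a tools/call request."""
    required = tool["inputSchema"].get("required", [])
    missing = [name for name in required if name not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return jsonrpc_request(
        request_id, "tools/call", {"name": tool["name"], "arguments": arguments}
    )


# Step 3: the model picks a tool and arguments; the client sends this request.
call_request = build_tool_call(2, example_tools[0], {"query": "Sphere, Las Vegas"})
print(call_request)
```

The argument check here only confirms that required keys are present; a fuller client would validate the arguments against the whole `inputSchema` before sending the call.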

MCP adoption is moving quickly. Thousands of MCP servers already exist, and the recently launched ChatGPT apps run on MCP under the hood. Ian noted that the protocol is becoming a common interface for tool integration across the AI ecosystem. One of the biggest challenges today is handling large data payloads, such as long driving-directions responses or large GeoJSON objects, which models can struggle to process efficiently. Ian expects the MCP specification to keep improving its resource handling and summarization, making it better suited to geospatial workloads that involve large, complex data.
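While the specification evolves, one workaround a server author might use is to return a compact summary instead of raw geometry. The sketch below is a hypothetical helper, not part of the MCP specification or any Mapbox API: it reduces a GeoJSON LineString to its vertex count, a bounding box, and an evenly downsampled set of coordinates that always keeps both endpoints.

```python
def summarize_linestring(feature: dict, max_points: int = 50) -> dict:
    """Reduce a GeoJSON LineString Feature to a compact, model-friendly summary.

    Hypothetical helper: returns the vertex count, the bounding box as
    [west, south, east, north], and at most max_points evenly spaced vertices,
    always including the first and last ones.
    """
    if max_points < 2:
        raise ValueError("max_points must be at least 2 to keep both endpoints")
    coords = feature["geometry"]["coordinates"]  # GeoJSON positions are [lon, lat]
    lons = [c[0] for c in coords]
    lats = [c[1] for c in coords]
    if len(coords) <= max_points:
        sampled = list(coords)
    else:
        # Evenly spaced indices from 0 to len(coords) - 1, inclusive.
        step = (len(coords) - 1) / (max_points - 1)
        sampled = [coords[round(i * step)] for i in range(max_points)]
    return {
        "vertex_count": len(coords),
        "bbox": [min(lons), min(lats), max(lons), max(lats)],
        "sampled_coordinates": sampled,
    }


# Usage with a synthetic 1,000-vertex line (illustrative data only).
route = {
    "type": "Feature",
    "properties": {},
    "geometry": {
        "type": "LineString",
        "coordinates": [[i * 0.001, i * 0.0005] for i in range(1000)],
    },
}
summary = summarize_linestring(route, max_points=10)
```

Sampling by vertex index is the simplest choice. A shape-preserving simplification such as Douglas-Peucker would keep sharp turns better, and the bounding box shown here does not handle routes that cross the antimeridian.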

Mapbox has developed two MCP servers designed for different use cases: one for AI agents working with location data and another for developer productivity.

Mapbox MCP Server: Geospatial intelligence for AI agents

The Mapbox MCP Server exposes core location APIs as tools that models can call, for example geocoding, search and POI search, routing, isochrones, matrix routing, and static images. This allows an LLM to chain spatial operations into multistep reasoning workflows. Ian shared a common example: trip planning. An agent can geocode a list of hotels and attractions, call the matrix or directions APIs to compute travel times, compare configurations, and present a recommendation supported by a map. Equipped with these tools, the model goes from generating text to acting as a location consultant.
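The compare step of that trip-planning workflow is ordinary code once travel times are available. This sketch assumes durations in seconds have already been fetched (for instance, from a matrix routing call) and reshaped into a nested dictionary keyed by hotel and attraction; the names and numbers are made up for illustration. It ranks hotels by total travel time to all attractions.

```python
def rank_hotels(durations: dict) -> list:
    """Rank hotels by total one-way travel time to every attraction.

    durations[hotel][attraction] is a travel time in seconds, e.g. one row of a
    hotels-by-attractions travel-time matrix. Lower totals rank first.
    """
    totals = {hotel: sum(row.values()) for hotel, row in durations.items()}
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical durations in seconds; not real Mapbox output.
durations = {
    "Hotel A": {"Museum": 600, "Park": 900, "Arena": 1200},
    "Hotel B": {"Museum": 300, "Park": 1500, "Arena": 600},
    "Hotel C": {"Museum": 1200, "Park": 300, "Arena": 1500},
}

for hotel, total in rank_hotels(durations):
    print(f"{hotel}: {total / 60:.0f} min total")
```

Summing is only one possible objective; an agent could instead minimize the longest single trip or weight attractions by how often the traveler expects to visit them.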

Ian pointed to existing customer examples such as Tripadvisor’s ChatGPT app, which already embeds Mapbox maps inside ChatGPT. With MCP, applications like this can go further, using current real-world data to estimate travel times, filter restaurants by distance and walkability, or surface personalized recommendations.
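Filtering by distance is a typical follow-up step after a search call. The sketch below uses straight-line (great-circle) distance from the haversine formula as a simple stand-in; a genuine walkability filter would more likely rely on walking travel times from a routing or matrix call. The 800 m threshold, restaurant names, and coordinates are all hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lon1: float, lat1: float, lon2: float, lat2: float) -> float:
    """Great-circle distance in meters between two (lon, lat) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def within_walking_distance(origin, places, max_m=800.0):
    """Return (name, meters) for places within max_m of origin, nearest first."""
    scored = sorted((haversine_m(*origin, *p["coords"]), p["name"]) for p in places)
    return [(name, round(d)) for d, name in scored if d <= max_m]


# Hypothetical hotel and restaurants as (lon, lat).
hotel = (-115.1650, 36.1210)
restaurants = [
    {"name": "Restaurant 1", "coords": (-115.1660, 36.1215)},
    {"name": "Restaurant 2", "coords": (-115.1400, 36.1500)},
]
print(within_walking_distance(hotel, restaurants))
```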

Mapbox DevKit MCP Server: Give coding agents direct access to Mapbox

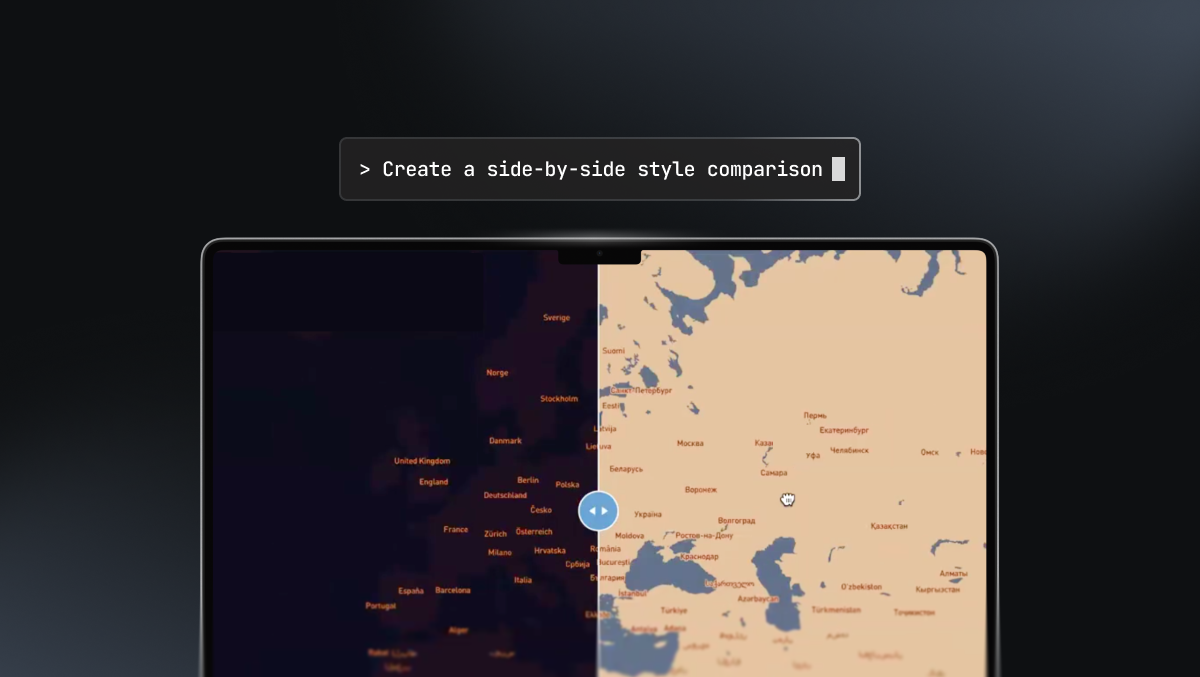

Coding agents are becoming a core part of the developer workflow. The second Mapbox MCP server, the DevKit, focuses on developer experience. Coding agents such as Claude Code can use the DevKit to handle routine Mapbox tasks: creating access tokens, generating or editing styles, comparing styles, and previewing maps. This reduces the friction of switching between dashboards and development environments.
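Comparing styles ultimately means diffing two style documents. The sketch below is not how the DevKit does it; it is a hypothetical helper that compares the `layers` arrays of two Mapbox Style Specification documents by layer `id` and reports which layers were added, removed, or changed. The two small styles are illustrative.

```python
def diff_style_layers(old_style: dict, new_style: dict) -> dict:
    """Compare two style documents' layers by id (hypothetical helper)."""
    old = {layer["id"]: layer for layer in old_style.get("layers", [])}
    new = {layer["id"]: layer for layer in new_style.get("layers", [])}
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(i for i in old.keys() & new.keys() if old[i] != new[i]),
    }


SOURCES = {"composite": {"type": "vector", "url": "mapbox://mapbox.mapbox-streets-v8"}}

old_style = {
    "version": 8,
    "sources": SOURCES,
    "layers": [
        {"id": "background", "type": "background", "paint": {"background-color": "#f8f4f0"}},
        {"id": "water", "type": "fill", "source": "composite", "source-layer": "water",
         "paint": {"fill-color": "#a0c8f0"}},
        {"id": "building", "type": "fill", "source": "composite", "source-layer": "building",
         "paint": {"fill-color": "#d6d6d6"}},
    ],
}
new_style = {
    "version": 8,
    "sources": SOURCES,
    "layers": [
        {"id": "background", "type": "background", "paint": {"background-color": "#f8f4f0"}},
        {"id": "water", "type": "fill", "source": "composite", "source-layer": "water",
         "paint": {"fill-color": "#6fa8dc"}},
        {"id": "park", "type": "fill", "source": "composite", "source-layer": "landuse",
         "filter": ["==", ["get", "class"], "park"], "paint": {"fill-color": "#c8e6c9"}},
    ],
}

print(diff_style_layers(old_style, new_style))
```

This ignores reordering, which matters visually because layers draw in the order they appear in the `layers` array; a fuller comparison would also diff `sources` and top-level properties.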

Prediction #3: An explosion of specialized agents – with geospatial skills

Ian placed MCP in a broader context: nearly every major platform now provides an agent framework, including OpenAI Agent Builder, AWS AgentCore, Microsoft Copilot Studio, Oracle’s AI Agent Platform, and open-source frameworks like Smolagents, CrewAI, and LangGraph. The infrastructure for agent orchestration is now established. The next wave will be highly specialized agents built for specific domains, with geospatial applications playing a significant role. He highlighted three families of geospatial agents already in progress at Mapbox: the Feedback Agent, an in-vehicle navigation agent, and the Location Agent.

Mapbox Feedback Agent

Mapbox Feedback Agent lets users send voice feedback without interrupting navigation or taking their eyes off the road. The agent understands natural language, even when a report describes a complex scenario or several issues at once. Feedback Agent also analyzes submissions, breaks each conversation into discrete, categorized issues, and adds relevant metadata.
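Splitting one spoken report into several structured issues implies some record format downstream. The schema below is purely hypothetical (the field names and categories are assumptions, not Mapbox's actual format) and shows how a single report mentioning two problems could become two categorized issues that share the same metadata. The model-driven extraction itself is out of scope; the `(category, description)` pairs stand in for its output.

```python
from dataclasses import asdict, dataclass
from enum import Enum
from typing import List, Tuple


class Category(str, Enum):
    """Hypothetical issue categories."""
    ROAD_CLOSURE = "road_closure"
    INCORRECT_POI = "incorrect_poi"
    ROUTING = "routing"
    OTHER = "other"


@dataclass
class FeedbackIssue:
    category: Category
    description: str
    lon: float
    lat: float
    timestamp_utc: str  # ISO 8601, e.g. "2025-01-01T12:00:00Z"
    session_id: str


def to_issues(parts: List[Tuple[Category, str]], lon: float, lat: float,
              timestamp_utc: str, session_id: str) -> List[FeedbackIssue]:
    """Attach shared report metadata to each (category, description) pair.

    In a real system the pairs would come from a model that splits the
    transcript; here they are supplied directly.
    """
    return [FeedbackIssue(cat, text, lon, lat, timestamp_utc, session_id) for cat, text in parts]


issues = to_issues(
    [(Category.ROAD_CLOSURE, "Exit ramp is closed for construction"),
     (Category.INCORRECT_POI, "The gas station on the corner has shut down")],
    lon=-115.17, lat=36.12, timestamp_utc="2025-01-01T12:00:00Z", session_id="demo-1",
)
for record in map(asdict, issues):
    print(record)
```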

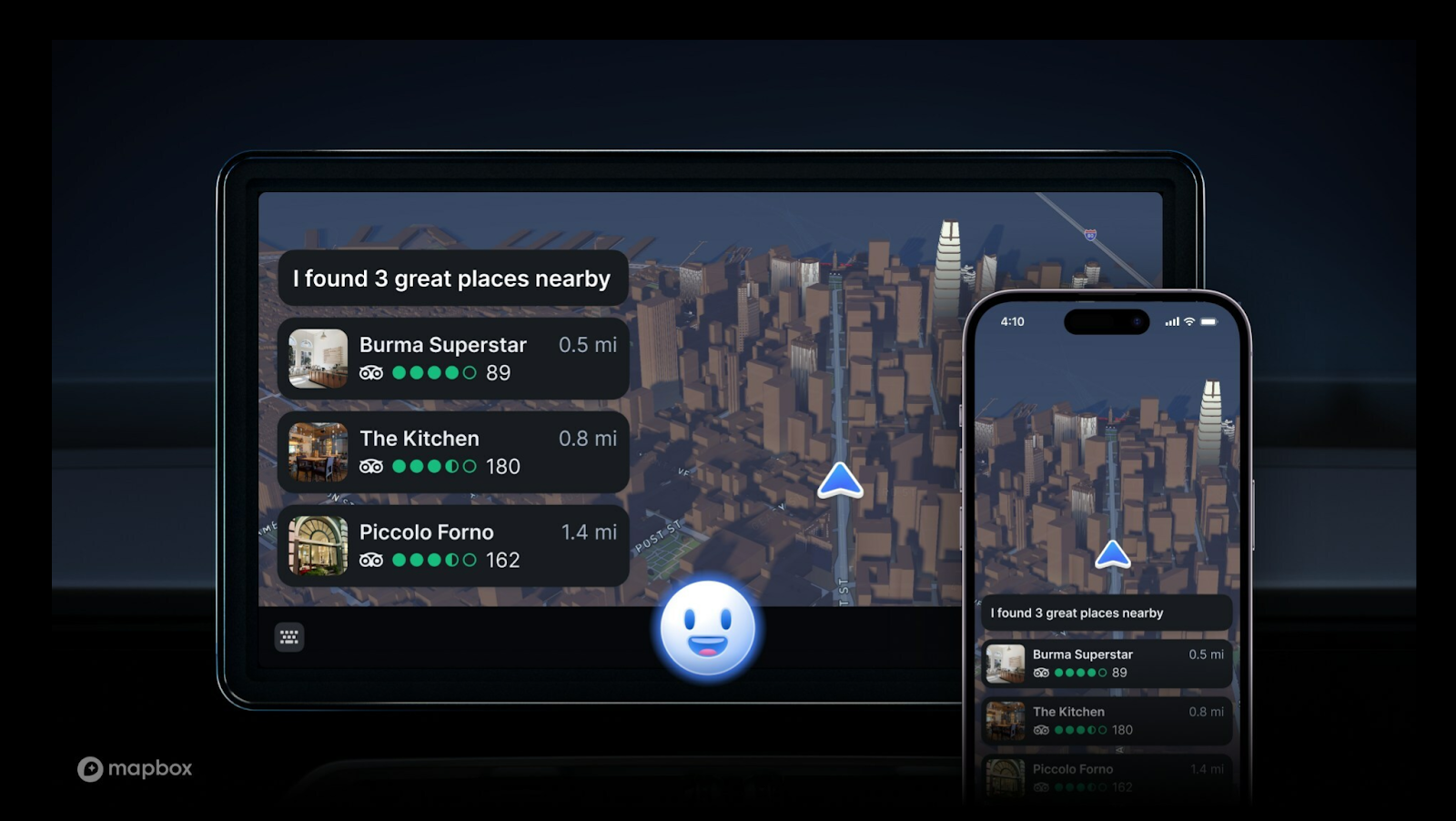

MapGPT for in-car navigation

MapGPT is an in-vehicle AI assistant that combines LLM conversation with Mapbox routing and search. Automakers can integrate MapGPT into vehicles to handle natural-language navigation requests, support multi-turn dialog, and provide a voice interface that lets drivers control and query vehicle systems.

The Mapbox Location Agent

The Mapbox Location Agent demonstrates what is possible when you connect the Mapbox MCP Server to an agent, enabling it to call backend geospatial tools and directly manipulate a frontend Mapbox GL JS map. Given Ian’s example query, “find hotels with a view of the Sphere in Las Vegas,” the agent not only identifies candidate hotels but also controls the map’s pan, zoom, bearing, and pitch to illustrate the view. The entire user experience is driven by the agent’s reasoning cycle.
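On the frontend, this pattern can reduce to the agent emitting a camera target that the page passes to Mapbox GL JS, for example through `map.flyTo`, which accepts `center`, `zoom`, `bearing`, and `pitch`. The sketch below covers only the agent side: a hypothetical `set_camera` message whose values are validated and clamped before being sent to the browser. The zoom and pitch limits are assumptions; check them against your map's `minZoom`, `maxZoom`, and `maxPitch` settings.

```python
import json


def clamp(value: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, value))


def camera_command(lon: float, lat: float, zoom: float,
                   bearing: float = 0.0, pitch: float = 0.0) -> dict:
    """Validate and clamp an agent-proposed camera for the frontend to pass to map.flyTo."""
    if not (-180 <= lon <= 180 and -90 <= lat <= 90):
        raise ValueError("center must be a valid (lon, lat) pair")
    return {
        "center": [lon, lat],                      # GL JS expects [lng, lat]
        "zoom": clamp(zoom, 0, 22),                # assumed zoom range
        "bearing": ((bearing + 180) % 360) - 180,  # normalize into [-180, 180)
        "pitch": clamp(pitch, 0, 85),              # assumed maximum pitch in degrees
    }


# Illustrative coordinates; "set_camera" is a hypothetical message type.
options = camera_command(-115.16, 36.12, zoom=17.5, bearing=250, pitch=70)
message = json.dumps({"type": "set_camera", "options": options})
print(message)
```

On the page, a small handler would parse the message and pass `options` to `map.flyTo`. Validating on the agent side means a malformed tool call never reaches the map.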

Many organizations are adopting this new ‘conversational map’ pattern for tasks like site selection, trip planning, and operational planning: scenarios that benefit from an AI co-pilot capable of manipulating geospatial data and visualizations.

Prediction #4: Dynamic, up-to-date data becomes default infrastructure for AI agents

Ian’s fourth prediction focused on dynamic data. By 2026, fresh, real-time information will shift from a ‘nice-to-have’ to a core requirement for agentic systems. Agent platforms will expect seamless access to live data sources; for geospatial queries, that means continuously updated maps, traffic conditions, points of interest, and spatial layers.

He highlighted how a constant flow of data updates already underpins Mapbox APIs. Mapbox processes hundreds of billions of location updates per day and hundreds of millions of miles of sensor data, and it maintains POIs and addresses that change continuously. Road networks are updated as construction, closures, and reconfigurations occur. This volume of live data enables agents to return answers grounded in the current world, not just a snapshot of the world at training time.
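Agents built on live data also need a rule for when cached answers are too old to trust. The sketch below is a generic time-to-live cache, not a description of how Mapbox serves data: each entry records when it was fetched, and a lookup goes back to the source once the entry exceeds a per-kind TTL, so fast-changing data such as traffic can expire far sooner than addresses. The TTL values are illustrative.

```python
import time
from typing import Any, Callable, Dict, Tuple


class FreshnessCache:
    """Cache keyed by (data kind, key) that refetches entries older than a per-kind TTL."""

    def __init__(self, ttl_seconds: Dict[str, float],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries: Dict[Tuple[str, str], Tuple[float, Any]] = {}

    def get(self, kind: str, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get((kind, key))
        if entry is not None and now - entry[0] <= self.ttl_seconds[kind]:
            return entry[1]  # still fresh enough for this kind of data
        value = fetch()      # stale or missing: go back to the live source
        self._entries[(kind, key)] = (now, value)
        return value


# Illustrative TTLs: traffic goes stale in a minute, addresses in a day.
cache = FreshnessCache({"traffic": 60, "address": 24 * 3600})
```

Injecting the clock makes the expiry logic easy to test without waiting in real time.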

Across industries, organizations are generating real-time data streams – from mobility and environmental sensors to weather, financial activity, and infrastructure systems. For agents meant to operate in the physical world, access to dynamic data is becoming a requirement, not an optional integration.

Closing thoughts

Ian closed by outlining the emerging GeoAI stack: LLMs that reason and converse, MCP as the bridge to tools and data, agent frameworks that orchestrate multistep tasks, and current geospatial data that grounds decisions in the real world. Together, these elements enable agents to understand, reason about, and act on the physical environment, with Mapbox positioned as the live-location layer these systems depend on.
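The stack Ian described can be sketched as a minimal agent loop: a model proposes either a tool call or a final answer, the orchestrator runs the tool (in a real system, through an MCP client), and the result goes back into the conversation for the next step. Every component here is a stand-in: `scripted_model` replaces an LLM with fixed logic, and the `travel_time` tool returns a hard-coded, hypothetical value instead of querying live routing data.

```python
from typing import Callable, Dict, List


def travel_time(origin: str, destination: str) -> str:
    """Hypothetical stand-in for a routing tool reached through MCP."""
    return f"{origin} to {destination}: 12 min by car"


TOOLS: Dict[str, Callable[..., str]] = {"travel_time": travel_time}


def scripted_model(messages: List[dict]) -> dict:
    """Stand-in for an LLM: request a tool first, then answer from its result."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "travel_time", "args": {"origin": "Hotel B", "destination": "Arena"}}
    return {"answer": f"Based on the routing tool: {tool_results[-1]['content']}"}


def run_agent(user_query: str, max_steps: int = 5) -> str:
    """Alternate model decisions and tool calls until the model answers."""
    messages: List[dict] = [{"role": "user", "content": user_query}]
    for _ in range(max_steps):
        decision = scripted_model(messages)
        if "answer" in decision:
            return decision["answer"]
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "name": decision["tool"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("How long from my hotel to the arena?"))
```

The `max_steps` cap is a common safeguard in agent loops, since a model that keeps requesting tools would otherwise never return.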

Watch the full presentation recording, starting at the 12:32 mark here. Ready to start building? Explore the tutorial: build your own agent with Mapbox MCP Server or connect with the Mapbox Location AI team to collaborate on the future of conversational maps.